To copyright or not to copyright: the big GenAI question

Should AI-generated content enjoy fair use or should human prompters be compensated? It depends, says S. Alex Yang

In 30 Seconds

Copyright laws on AI-generated content vary significantly from country to country

Incentivising human creators to continue producing training content will be critical moving forward

Copyright decisions should be predicated on two things: availability of training data and how competitive the AI market is as new players enter

Back in December of 2023, The New York Times sued OpenAI and Microsoft in a landmark case for copyright infringement, contending that the tech companies were liable for “billions of dollars in statutory and actual damages” for the unauthorised use of proprietary content to train AI models. Millions of its articles had been repurposed, said The Times, to train “automated chatbots that now compete with the news outlet...”

The same year, Beijing’s Internet Court tried a case brought by a digital artist whose work had been reproduced without permission on an online platform. Unlike the Times’ content, the work in question had been generated using Stable Diffusion, a text-to-image generative AI model. Here, the plaintiff asserted intellectual ownership and conceptual originality, arguing that the acuity of his prompts and editing skills made him the “owner” of the work and therefore entitled to copyright protection.

In its decision, the Court ruled in favour of the plaintiff, marking a significant development in China’s evolving legislation around the copyrightability of AI-generated content. Interestingly, in September of 2023, the US Copyright Office again rejected a claim by artist Jason Allen to copyright an award-winning work he had made using the generative AI tool Midjourney.

These and other cases underscore the complexities of copyright regulations in the era of generative AI. Should AI training be considered fair use, allowing the use of copyrighted material without permission or compensation to the original creator? Or should human content creators be compensated when their work is used to train AI models? Furthermore, what protections should apply to content generated mainly or entirely by AI?

Weighing in on all of this is new research by S. Alex Yang, Professor of Management Science and Operations at London Business School. Together with Angela Huyue Zhang of the University of Southern California, he developed an economic model that takes a clear-eyed look at the pros and cons of both fair use and AI copyrightability. How these critical issues should be decided, says Alex, depends largely on two things: the availability of quality training data, and how competitive the AI development market is.

“AI development relies heavily on original work for training large language models (LLMs), so we need to think about how we incentivise human creation, especially when such training data is scarce,” explains Alex. “At the same time, the competitive dynamics of the AI industry play a crucial role. In highly competitive markets, firms are more motivated to innovate and invest in sustainable data acquisition strategies.”

As long as original training data remains abundantly available, there’s little benefit for AI firms to go out of their way to compensate the original creators. In the setting of generative AI for video, for example, says Alex, a more permissive fair use framework accelerates model development, yielding benefits for all: more revenue for AI companies and content creators, and enhanced outputs for end users.

Granting broader copyright protection to AI-generated content in this scenario also makes sense, especially when the AI development market is competitive, as higher AI copyrightability can stimulate demand for AI products, encouraging innovation. There is a caveat, however: “in markets with limited competition, such protections may disproportionately benefit dominant tech firms, potentially at the expense of consumers and the pace of model advancement.”

What happens when original data becomes scarce?

This all changes when original, human-created data starts to dry up, says Alex. And this is already happening in the world of text.

“Models like ChatGPT have devoured so much historical data that future iterations – GPT 5, 6 and so on – will increasingly depend on fresh content, be that content solely created by humans, or synthetic data carefully curated under human guidance.”

In such a context, incentivising human creators to keep on creating fresh content becomes critical. Failure to compensate creators through adequate copyright mechanisms could slow AI development, says Alex.

And switching out human or hybrid content for purely machine-generated data isn’t a viable solution, at least not yet, he warns. “Studies have shown that training AI models exclusively on AI-generated data can degrade model performance to the point of failure. So at least in the near future, we will always need humans in the loop, be they sole creators, co-creators or providing feedback to the machine.”

Running their model through a low data availability scenario, Alex and Angela find that a permissive fair use standard and expansive copyright protection for AI content both carry risks. Crucially, these two policy levers don’t operate in isolation – they interact in ways that regulators must consider carefully.

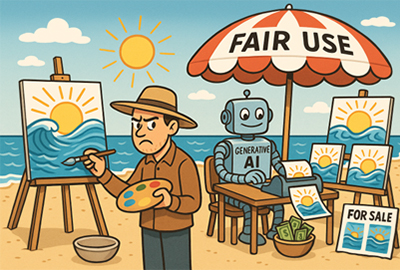

This illustration was carefully prompted by humans but generated by ChatGPT. Based on the policy recommendation in this article, it should enjoy partial copyright protection.

“If the fair use standard is too lenient, it erodes incentives for human creators to produce new content, further narrowing an already strained data pipeline,” explains Alex. “And if AI-generated content is granted strong copyright protection, humans are more likely to adopt AI tools for content creation, doubling down on the data availability challenge.”

“You need that fresh training data from human beings. So, if you want to grant more copyright to AI-created content, you must also strengthen mechanisms that compensate humans for their original contributions,” Alex argues. “This becomes even more crucial as competition intensifies, because demand for fresh data will increase.”

This is a likely scenario, says Alex, as new players from China and elsewhere emerge.

One size does not fit all

For regulators, designing a policy environment that can accommodate an ever-growing appetite for data will require a deep understanding of how different copyright levers interact. The challenge lies in developing regulatory frameworks that are both flexible and context-specific. A one-size-fits-all approach is unlikely to succeed; instead, a case-by-case approach may prove to be the most effective way forward.

Meanwhile, businesses should pay close attention to the evolving regulatory landscape, says Alex.

“For organisations accelerating their adoption and development of generative AI, the best approach is to advocate for more agile and responsive regulation: frameworks that can keep pace with technological change,” he advises. “Equally important is being prepared to navigate an increasingly fragmented and diverse regulatory environment, since different jurisdictions have different regulatory priorities.”

The trade-offs highlighted in this research extend well beyond regulation, says Alex. The future development of AI – and its broader societal impact – will depend on the creation of sound economic mechanisms that align the incentives of all stakeholders, from developers to content creators.

Recognising growing resistance from original content creators, some AI companies have begun signing licensing agreements with major media outlets and content platforms, such as the Financial Times and Reddit. These deals signal an emerging willingness to share value.

Another approach has been to place limits on the type of content AI tools can produce. For instance, many generative models will refuse to replicate music or art in the style of specific creators. While this reduces legal exposure, it still falls short of actively incentivising original creators.

A more effective and sustainable solution, suggests Alex, may lie in developing value-sharing mechanisms that allow creators to directly benefit when their unique styles or ideas contribute to AI-generated outputs.

“If artists and writers see a clear, fair return from the use of their creative fingerprints, they’re more likely to engage with the ecosystem – and keep feeding it with the high-quality input that AI systems depend on.”

Incentivising human creators

Being more responsible in sourcing training data could yield important strategic benefits for AI developers. More recently, OpenAI accused Chinese AI company DeepSeek of using ChatGPT output to build its own model through a process known as model distillation. Given OpenAI’s own opaque training practices, enforcing such boundaries is difficult but important.

“If model distillation is proven to be a technically effective approach for developing economically efficient AI models,” says Alex, “frontier AI developers may explore ways to monetise this activity.”

Establishing norms or licensing frameworks for the use of AI-generated outputs could help balance cutting-edge innovation and cost-efficient mass adoption of AI.

Looking ahead, building a sustainable AI ecosystem will require more than just technical breakthroughs. It will depend on ensuring that human creators benefit not only from AI as a productivity tool, but also from their ongoing role as indispensable sources of training data. Without meaningful incentives, the creative engine that powers generative AI risks stalling.

Further details on the research can be found here.
